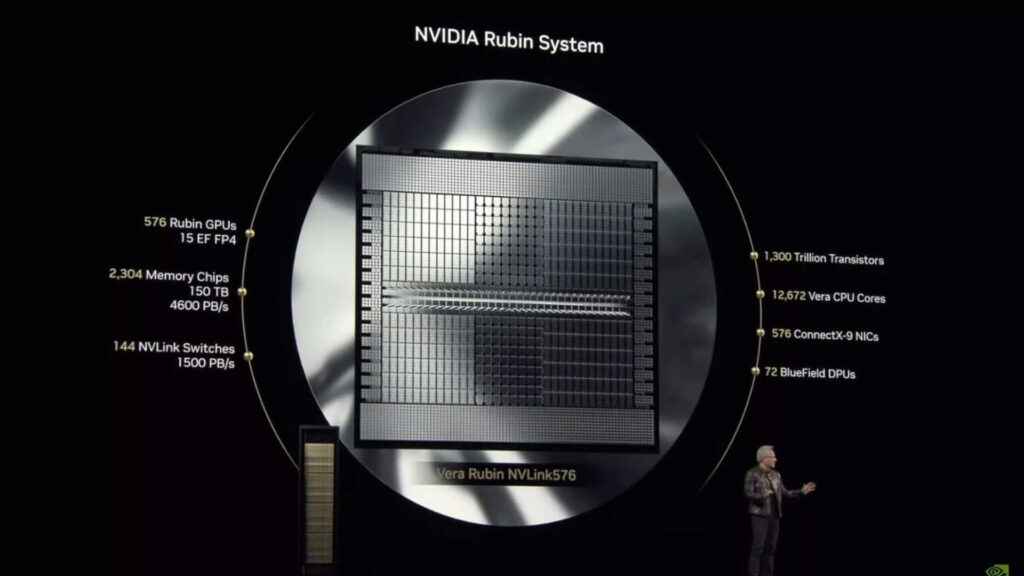

Nvidia has unveiled Rubin CPX, a GPU designed specifically for massive-context AI tasks such as million-token code analysis and video generation. It is part of the integrated Vera Rubin NVL144 CPX rack platform, which delivers 8 exaflops of AI performance, 100 TB of fast memory, and 1.7 PB/s of memory bandwidth in a single rack, a significant improvement over the previous GB300 NVL72 systems.

Sources: IT Pro, Nvidia News, Reuters

Key Takeaways

– Purpose-Built for Long-Context AI: Rubin CPX is optimized to handle long, million-token context workloads like full-hour video and massive codebases, integrating custom video decode/encode and AI inference in one chip.

– Enormous Rack-Scale Performance Boost: The Vera Rubin NVL144 CPX platform delivers ~8 exaflops of compute, 100 TB fast memory, and 1.7 PB/s bandwidth—over 7.5× the AI performance of the prior GB300 NVL72 system.

– On-Schedule 2026 Rollout: Rubin architecture, including Rubin CPX and accompanying CPUs/networking, is set for production and launch in late 2026, following the Rubin tape-out at TSMC and Nvidia’s shift to annual AI chip releases.

In-Depth

When a major new chip rolls out, tech folk perk up, and Nvidia just dropped a real eye-opener with Rubin CPX, a GPU specifically crafted for massive-context AI workloads. Think hour-long video inference or analysis of sprawling codebases: tasks that demand context sizes earlier GPUs strain to handle. What makes Rubin CPX special is that it isn't just raw compute; it also packs built-in video decoders and encoders alongside its AI inference engines, all on a single monolithic die for efficiency and throughput.

This GPU finds its home in the Vera Rubin NVL144 CPX platform, a full rack rather than a single card, delivering 8 exaflops of AI performance, 100 TB of fast memory, and 1.7 petabytes per second of memory bandwidth. That's over 7.5 times the AI performance of Nvidia's previous GB300 NVL72 system. That kind of firepower doesn't come cheap, or small, but it changes the game for data-center-scale AI inference.

What's more, all signs point to a 2026 rollout. Nvidia has taped out the Rubin GPU, the Vera CPU, and the supporting networking components behind the NVL144 platform, which puts manufacturing on track for production and launch in late 2026. This also marks a strategic shift: Nvidia now delivers new AI chip families annually, accelerating its development pace to keep up with rapid growth in demand.

Bottom line? Rubin CPX and the Vera Rubin platform represent a confident leap forward into AI's long-context frontier, banking on massive memory capacity and sheer performance to outpace competitors and serve a new generation of AI applications.