The squiggly letters, checkboxes and “select all the images with stoplights” puzzles that once dominated the internet are getting harder to find, because the classic CAPTCHA is vanishing, and for good reason. According to a recent article in Wired, providers such as Cloudflare and Google, through its reCAPTCHA service, have shifted to background risk-scoring systems that silently evaluate user behaviour and device signals instead of interrupting users with visible puzzles. The article argues the transition has two drivers: bots and AI became able to solve old CAPTCHAs quickly, making them ineffective, and user frustration soared. Meanwhile, other analyses suggest the move to “invisible” verification is raising fresh questions about privacy, data tracking and how much users know about what is happening. This change in the quiet machinery of the web may significantly affect user experience, site security strategy and regulatory oversight. Other industry reports detail how bot traffic and AI-powered malware are forcing a rethink of web forms and anti-bot defences, and how firms are moving toward behavioural and sensor-based verification instead of puzzles.

Key Takeaways

– Traditional CAPTCHAs—images of traffic lights, distorted letters etc.—are being phased out because advanced bots and AI models can solve them too reliably, undermining their effectiveness.

– The new wave of anti-bot defence relies on “invisible” or behind-the-scenes signals (device fingerprinting, behavioural metrics, risk scores), which reduce user friction but raise privacy and transparency concerns.

– With the decline of visible CAPTCHAs, organisations must reassess how they secure web forms and user interactions, while regulators and users gauge the trade-off between convenience, security and data collection.

In Depth

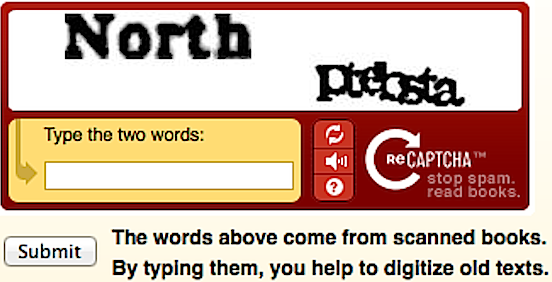

For years the CAPTCHA served as a frontline filter. You know the drill: type in the distorted words, pick out all the crosswalks, click “I am not a robot.” It was designed to separate flesh-and-blood humans from bots, giving websites a straightforward test built into the interaction flow. But as a recent article in Wired explains, we’ve all but stopped seeing such tests in 2025. Why? Because the underlying tech was outpaced. Bots and AI models are now smart enough to solve even the tricky ones, and the human cost (frustration, accessibility issues, abandoned sessions) became too high. (see wired.com story)

That prompted a shift. Major providers of CAPTCHA-style challenges, including Google’s reCAPTCHA and Cloudflare’s Turnstile, started to bake verification logic into the background. Instead of asking you to solve a puzzle, the system checks device data, browsing patterns, mouse movement, cookies and other signals to assign a “risk score.” If you look like a legitimate human on a trusted device with a clean history, you pass silently. If not, you might get a visible challenge or be blocked. Wired quotes Cloudflare’s Reid Tatoris: “Clicking the button doesn’t at all mean you pass… that is a way for us to gather more information.”
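To make the idea of a background “risk score” concrete, here is a minimal sketch in Python. It is illustrative only: the signal names, point weights and thresholds are assumptions invented for this example, and they do not describe how reCAPTCHA, Turnstile or any other real product computes its scores.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical signals a background check might look at."""
    known_device: bool        # device has been seen before
    has_prior_cookie: bool    # a cookie from an earlier visit is present
    pointer_events: int       # mouse/touch events observed before submit
    seconds_on_page: float    # time between page load and submit


def risk_score(s: Signals) -> int:
    """Return a score from 0 (looks human) to 100 (looks automated).

    The weights are made up for illustration.
    """
    score = 0
    if not s.known_device:
        score += 30
    if not s.has_prior_cookie:
        score += 20
    if s.pointer_events == 0:
        score += 30
    if s.seconds_on_page < 2.0:
        score += 20
    return score


def decide(score: int, challenge_at: int = 40, block_at: int = 80) -> str:
    """Map a score to one of three outcomes: pass, challenge or block."""
    if score >= block_at:
        return "block"
    if score >= challenge_at:
        return "challenge"
    return "pass"


if __name__ == "__main__":
    returning_user = Signals(True, True, pointer_events=12, seconds_on_page=8.0)
    scripted_client = Signals(False, False, pointer_events=0, seconds_on_page=0.5)
    print(decide(risk_score(returning_user)))
    print(decide(risk_score(scripted_client)))
```

In this toy version a returning visitor with normal activity passes without seeing anything, while a client with no history and no pointer activity is blocked. Real systems presumably combine far more signals, and do so statistically rather than with fixed weights.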

On the one hand, this is good: fewer frustrating puzzles, smoother user flows and fewer abandoned sessions, especially for users unfamiliar with odd image grids. On the other hand, it raises subtle but important questions: what signals are being collected behind the scenes? Do device fingerprinting and behavioural analysis invade privacy? An article from WebProNews flags exactly that: the disappearance of visible CAPTCHAs is part of a broader trend of AI-integrated security, where biometrics and tracking data become the default, and privacy regulators are starting to scrutinise the practice.

Meanwhile, defenders are facing a stark reality: bots haven’t gone away; they’ve simply adapted. A TechRadar piece highlights how AI-powered malware and bots now routinely bypass older CAPTCHA systems, which makes the problem considerably harder to contain. If bots can pretend to be humans, or mimic human-like behaviour, then visible puzzles alone don’t cut it anymore. That means organisations need to deploy layered defences: background risk scoring, real-time monitoring, intent-based filtering, and perhaps novel challenge types that humans can pass but bots can’t.
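The “layered” part can be sketched in a few lines of Python. This is an assumption-laden illustration, not any vendor’s design: the layer names, the request fields (`client_id`, `timestamp`, `risk_score`) and the thresholds are all hypothetical. Each layer returns its own verdict, and the strictest one wins.

```python
from collections import deque

# Ordering used to pick the strictest verdict across layers.
SEVERITY = {"pass": 0, "challenge": 1, "block": 2}


class RateMonitor:
    """Real-time monitoring layer: blocks a client that sends more than
    `limit` requests within a sliding `window` of seconds."""

    def __init__(self, limit=5, window=10.0):
        self.limit = limit
        self.window = window
        self.seen = {}  # client_id -> deque of recent request timestamps

    def __call__(self, request):
        times = self.seen.setdefault(request["client_id"], deque())
        now = request["timestamp"]
        times.append(now)
        # Drop timestamps that have fallen out of the window.
        while times and now - times[0] > self.window:
            times.popleft()
        return "block" if len(times) > self.limit else "pass"


def score_layer(request):
    """Risk-scoring layer: reads a precomputed 0-100 score (hypothetical field)."""
    score = request.get("risk_score", 0)
    if score >= 80:
        return "block"
    if score >= 40:
        return "challenge"
    return "pass"


def evaluate(request, layers):
    """Run every layer and return the strictest verdict any of them gave."""
    verdict = "pass"
    for layer in layers:
        result = layer(request)
        if SEVERITY[result] > SEVERITY[verdict]:
            verdict = result
    return verdict
```

Every layer runs on every request, so the rate monitor keeps counting even when the score layer has already asked for a challenge. A client with a clean score can therefore still be stopped for hammering a form, which is the point of layering.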

For web users, this evolution means less visible friction but more unseen checks. You may not notice that you’re being scored or assessed for “human-ness,” but you are. For web businesses and platform builders, the shift means redesigning user flows, auditing third-party risk tools, ensuring accessibility and transparency, and paying attention to privacy laws and consent. In short, the familiar “click all the bikes” CAPTCHA may be going away, but the contest between bots and the gates meant to keep them out is simply becoming more hidden and more algorithmic.

For our digital-first world, this signals a subtle but wide-ranging change. The next time you log into a site and breeze right through the “human check,” it won’t be because little has changed; it will be precisely because a lot has changed behind the scenes. The implications are both practical (fewer user headaches, more streamlined UX) and sobering (less transparency, more background data collection). As this trend spreads, both users and businesses will need to adjust expectations around what “verification” means in 2025 and beyond.