Australia is rolling out sweeping new rules that will require search engines to apply age verification to logged-in users beginning December 2025. The aim is to restrict under-18s from seeing pornography, high-impact violence, self-harm content, and more. But the Digital Industry Group (DIGI) says sex education and other educational or health-oriented content won’t be covered by these restrictions, because such content is not “Class Two” material under the Online Safety Act. The broader regulatory scheme sits within the Online Safety Act and a set of new industry codes that aim to balance child protection with continued access to legitimate, useful information.

Sources: Epoch Times, ABC (AUS)

Key Takeaways

– The new codes require search engines to activate age-assurance tools for logged-in users and enforce “safe search” modes for those deemed under 18.

– According to DIGI, sex education, health promotion, and educational content fall outside the categories of restricted material the new rules target for filtering.

– Critics warn the regulation risks overreach, privacy harms, and accidental blocking of legitimate health information despite its stated exemptions.

In-Depth

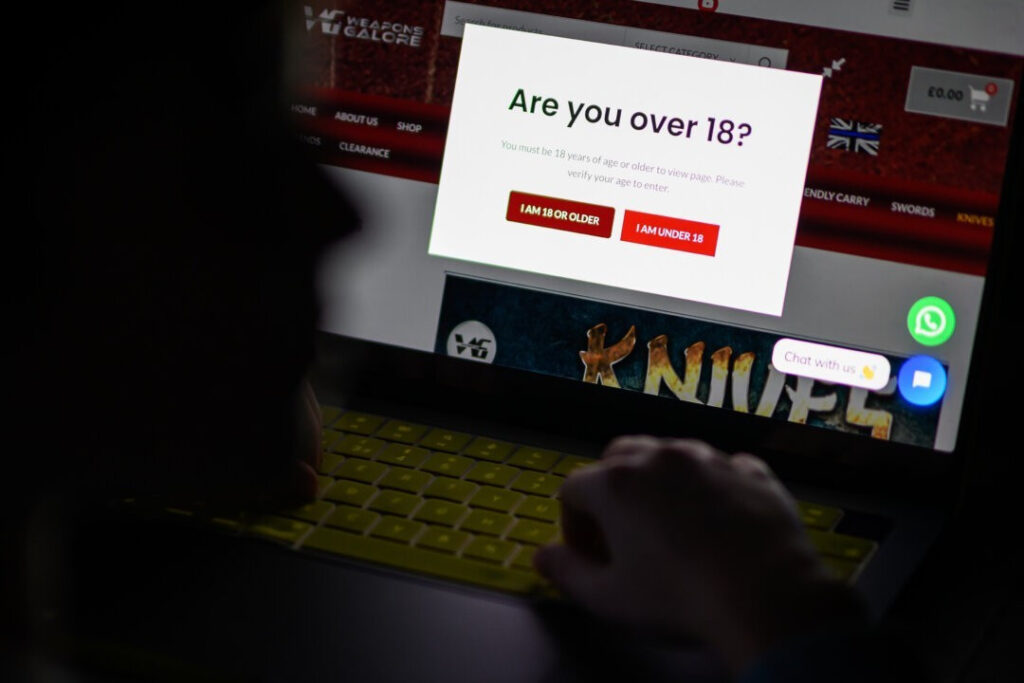

Australia’s push to tighten online safety is entering a bold new phase. Beginning December 27, 2025, search engines such as Google and Microsoft’s Bing will be required to apply age-assurance systems to logged-in users. For users flagged as under 18, the systems must default to safe search settings, filtering out adult content, violence, self-harm material, and other age-inappropriate content. The move is part of a wider regulatory wave under the Online Safety Act, with complementary codes covering social media, hosting services, app platforms, and devices. (ABC News)

Despite this strong clampdown, not all content is caught in the net. According to DIGI, sex education and similar content deemed “useful or educational” will be spared the filtering regime, because such material falls outside the definition of “Class Two” restricted content under the Online Safety Act. The Epoch Times reports that DIGI has clarified that the new rules will not “capture information deemed useful or educational.”

Still, questions linger over how cleanly the exclusions will hold in practice. Experts caution that real-world filtering mechanisms often overblock or misclassify content, and that the line between disallowed and allowed material is blurry, especially for sexual health, gender education, and harm-reduction content. (Guardian) Some public health advocates note that young people rely on online resources for sex education and mental health guidance, and warn that any misstep could inadvertently cut off access to vital information.

Privacy is also a major concern. Age checks can involve facial recognition or other biometric systems, identity documents, or inferences drawn from user data, raising alarms about profiling, data retention, and potential misuse of personal information. The Guardian has flagged the technical and civil liberties implications of such systems. In addition, the rules take the form of industry codes registered by the eSafety Commissioner under a co-regulatory model rather than legislation passed by Parliament, which means scrutiny and public debate may be limited.

In sum, Australia’s new online safety regime seeks to thread the needle: strongly curbing minors’ exposure to harmful content while carving out a safe harbor for education and health content. Whether the design succeeds, or instead causes collateral damage to free access and privacy, remains to be seen.