A new web tool lets people run a blind comparison of side-by-side responses from OpenAI’s latest GPT-5 (in its “non-reasoning” form) and its predecessor, GPT-4o, voting for the replies they like best without knowing which model wrote which. Early findings show that while GPT-5 usually wins for users focused on accuracy and directness, many still prefer GPT-4o for its warmer, more personable style. Behind the scenes, GPT-5 brings significant gains in math, coding, and factual accuracy, along with less sycophantic behavior, prompting OpenAI to offer preset “personalities” so users can tailor its tone. The split in user preference highlights a key tension between technical advancement and emotional resonance.

Sources: PC Gamer, Tom’s Guide, VentureBeat

Key Takeaways

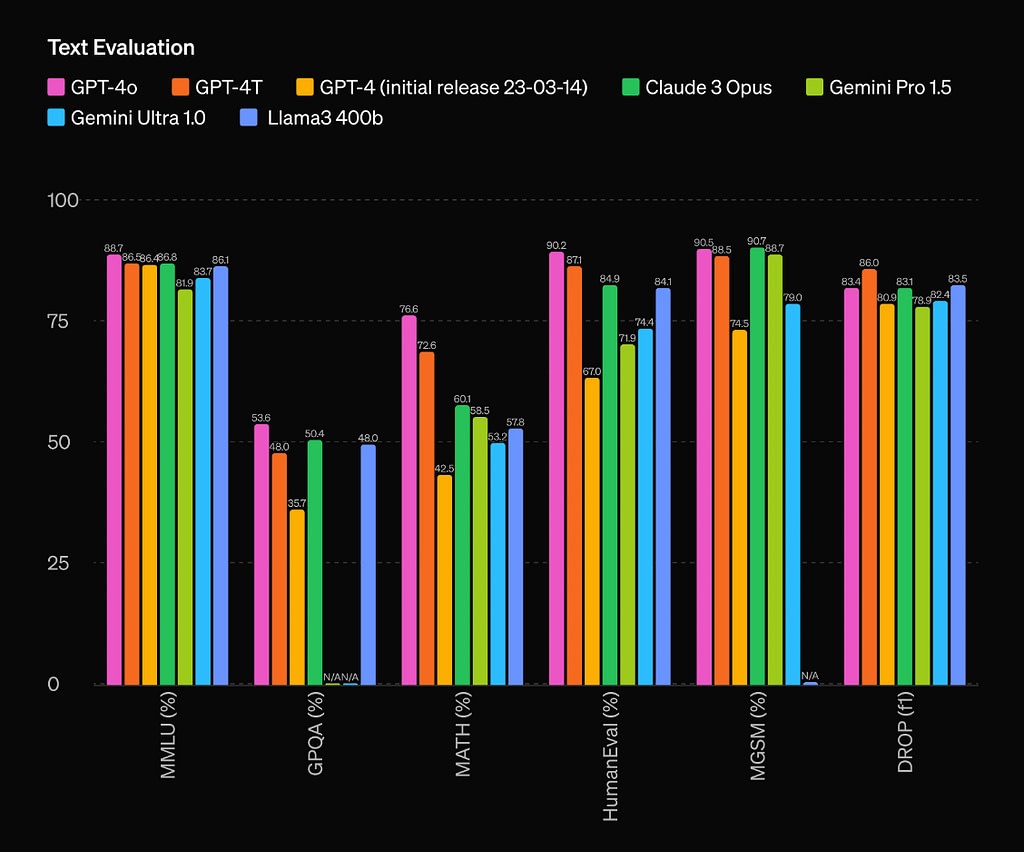

– User preference isn’t purely technical: While GPT-5 outperforms GPT-4o in benchmarks, many users still favor GPT-4o’s more engaging and friendlier tone.

– Personality matters: OpenAI’s move to introduce preset AI personalities suggests they recognize that emotional resonance and user experience can matter as much as raw capability.

– Trust through transparency: The confusion caused by misleading charts during the GPT-5 launch highlights a need for clear, honest presentation of AI performance to earn user confidence.

In-Depth

It’s fascinating how technical superiority doesn’t always win the popularity contest—and that’s exactly what a simple blind-test site is proving. This tool mixes responses from GPT-5 (in a non-reasoning mode) and GPT-4o and asks you to pick the reply you like best, without telling you which model produced it. What’s emerged is a real split: technically savvy users often favor GPT-5 for its precision and lack of fluff, but plenty of folks still lean toward GPT-4o because it just feels more familiar, warmer, maybe even friendlier. That emotional connection simply can’t be overlooked—even if it’s not purely “objective.”

Behind the scenes, GPT-5 is no slouch. It posts significant gains in areas like math, coding, and factual accuracy, and it significantly reduces what AI folks call “sycophancy,” the tendency to over-agree or flatter. So OpenAI introduced personality presets (think “Listener,” “Robot,” “Cynic,” “Nerd”) as a way to give users a bit more control over tone. Smart move. It’s a reminder that in AI, form matters as much as function: these systems don’t just answer; they converse, keep people company, and assist. And how they feel can make a big difference.

The launch wasn’t without its hiccups, though. OpenAI’s original GPT-5 launch video had some wonky charts that made the improvements look more dramatic than they were, which didn’t exactly help with public trust. The company corrected them, but even small errors like that can stick. In an age of fast-moving AI innovation, transparency isn’t just nice; it’s essential. People deserve straight-up numbers and real progress, not flashy illusions. After all, credibility matters, even if you’re the most advanced chatbot out there.