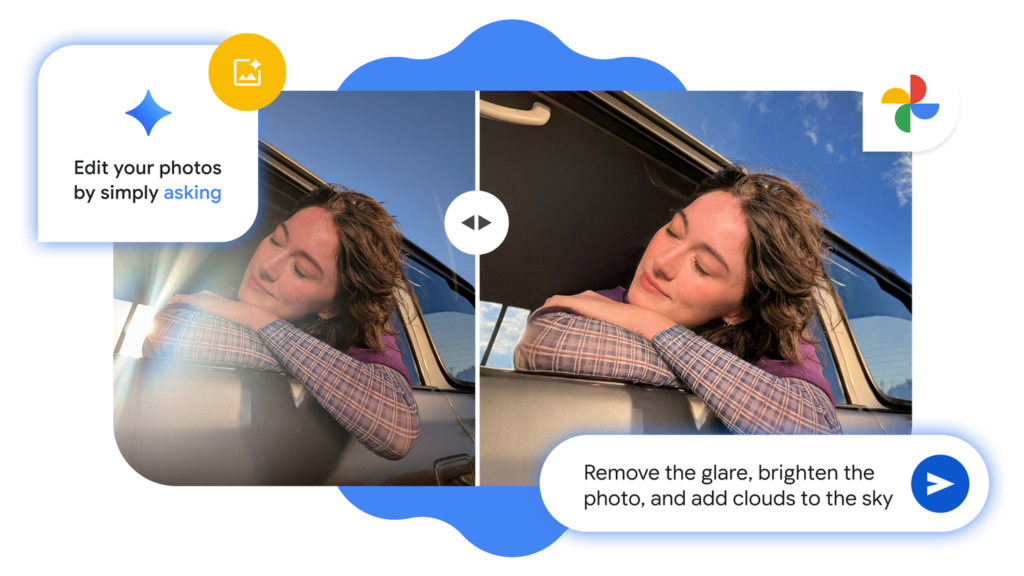

Google has expanded its Gemini-powered conversational editing feature in Google Photos beyond the Pixel 10 family to include eligible Android phones in the U.S. The feature, accessed via a “Help me edit” option in the editor, allows users to issue commands—by voice or text—to make edits like removing background objects, adjusting lighting, or restoring older photos, instead of manually using sliders or individual tools. To use it, users must enable “Ask Photos” and opt into Gemini; some settings like location estimates and Face Groups may also need to be turned on.

Sources: The Verge, Thurrott.com, Android Authority

Key Takeaways

– The rollout means the conversational editing feature is no longer exclusive to Pixel 10 devices—it’s now available to a wider set of Android users in the U.S., provided they meet eligibility requirements.

– Users must opt in: enabling “Ask Photos” and agreeing to use Gemini is required, and some settings such as location estimates and Face Groups may also need to be turned on.

– While convenient and powerful, editing by natural language or voice works best with clear prompts. Imprecise commands leave more of the interpretation to Google’s AI and can produce less predictable results.

In-Depth

Google’s new conversational editing tool in Google Photos marks another step toward simplifying how people interact with digital media. Previously, the ability to simply ask your phone to change a photo (for example, “remove the glare,” “make it more vibrant,” or “restore this old photo”) was limited to the Pixel 10 line. As of late September 2025, Google has made it available to eligible Android phones across the United States.

At its core, this editing method trades traditional manual controls (sliders, tool palettes, masking, and so on) for a natural-language or voice interface. Instead of knowing which slider to move or which tool to pick, you simply tell Photos what you want done, and the Gemini AI model handles the rest. That carries obvious advantages: speed, accessibility (especially for users who don’t want to learn all the editing tools), and perhaps creativity. But there are trade-offs: vague prompts can lead to unexpected results, and the user may sometimes need to refine the request over several rounds.

From the user’s perspective, the feature is opt-in. You need to enable “Ask Photos” and agree to use Gemini, and you may also need to turn on location estimates and Face Groups. Once that’s done, the “Help me edit” option appears in the editor, though it may take some time after opting in to show up. For now, the feature is U.S.-only on Android; there is no confirmed timeline for an international or iOS rollout.

Technically, the rollout ties into Google’s broader push toward generative AI and Gemini’s increasing role in everyday user tools. In parallel, Google is adding transparency through metadata standards (e.g., C2PA Content Credentials), so that edited or AI-modified images can carry markers about how they were changed. This matters for trust, given growing concerns over deepfakes, AI-manipulated images, and the ethics of content editing.

In short: if you have an eligible Android phone in the U.S., this feature substantially lowers the barrier to producing polished photos. Whether for casual users who hate fiddling with technical settings, or for people who want quick fixes, conversational editing promises speed and usability. But for precision, or when exact control is needed, manual editing tools will still have their place.