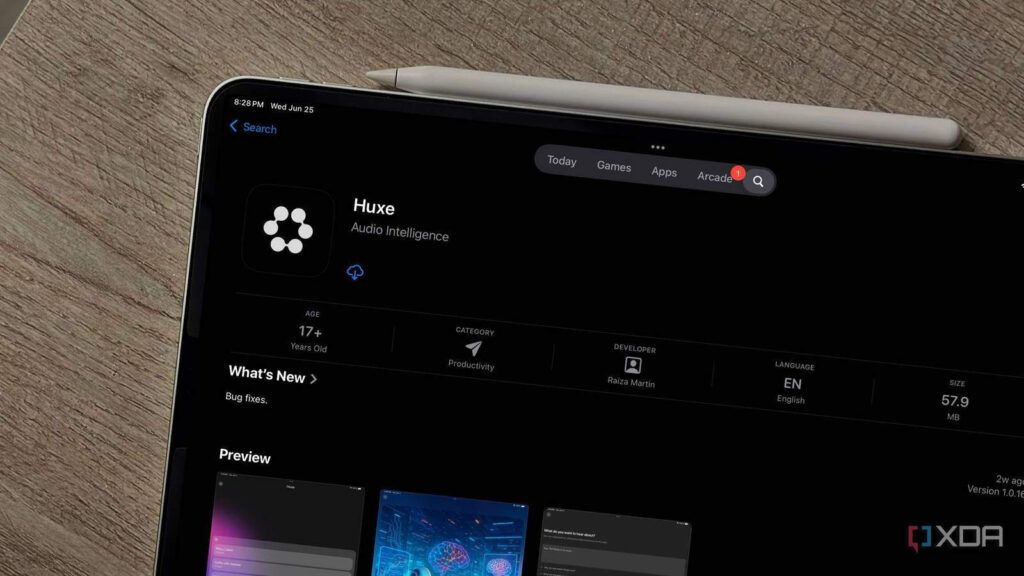

A fresh startup called Huxe is shaking up how we consume news and research by converting topics, emails, and calendars into interactive AI-led audio “podcasts.” Built by three engineers who formerly worked on Google’s NotebookLM, Huxe launched broadly (after an invite-only phase) in mid-2025 and has already secured $4.6 million in funding from backers including Conviction, Genius Ventures, Dylan Field (Figma), and Google Research’s Jeff Dean. The app offers features like a daily personalized briefing drawn from your inbox and schedule, the ability to ask questions mid-audio, and live “stations” you can follow in topics such as tech, sports, or even celebrity gossip. Huxe is positioned toward information rather than entertainment, and its ambition is to push audio as a screen-free medium for deep consumption, though questions remain about privacy, model latency, and how well the generated narratives will hold up over time.

Sources: The Data Exchange, Google Play

Key Takeaways

– Huxe aims to transform how the public digests information by converting text, emails, and calendar data into conversational audio with interactive AI hosts.

– The startup has already raised $4.6 million and is backed by influential names, signaling confidence in the audio-first AI direction.

– Core challenges ahead include maintaining user trust around privacy, ensuring low latency in audio interactions, and preventing filter bubbles in the content fed to users.

In-Depth

The Huxe launch feels like a natural evolution of the NotebookLM concept (turning dense information into narrated audio), but refined and made more interactive. The fact that three of the original NotebookLM engineers are behind Huxe lends both continuity and credibility to the project. Rather than simply summarizing documents, Huxe aims to embed itself into users’ daily rhythms: pulling from emails and calendar events to craft a morning briefing, then allowing follow-up questions and shifts in topic mid-stream. You can think of it as marrying a personal assistant to a podcast producer, where you can steer the narrative as it plays.

From a consumer perspective, that’s compelling. Many of us already skim newsletters, open Slack threads, and juggle tabs; Huxe promises a more seamless way to digest that information — especially when you’re multitasking. The “live station” model is notable: you pick a topic and then Huxe continually taps sources so you’re always getting the latest on that subject without having to re-search. That feature may encourage users to deepen engagement in narrower domains, which is a double-edged sword: great for depth, but potentially risky from a filter bubble perspective.

On the technical side, Huxe faces nontrivial challenges. Real-time audio interaction (especially when it is interruptible and adaptive) demands low latency and reliable model performance. In interviews, the team has acknowledged this and says it is working to parallelize tool calling with voice synthesis to minimize delay. Privacy is also front and center. Because Huxe integrates with inherently sensitive user data (emails, schedules), the engineering approach is designed to ensure these inputs are used for personalization rather than global training. That’s reassuring, but user trust will have to be earned over time.
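To make the latency point concrete, here is a minimal sketch of why overlapping a tool call with voice synthesis helps. It is not Huxe’s code: `fetch_tool_result` and `synthesize_speech` are hypothetical stand-ins, and their delays are simulated with sleeps. It only illustrates the general idea of running the two steps concurrently instead of back to back.

```python
import asyncio
import time


async def fetch_tool_result(query: str) -> str:
    """Hypothetical tool call (e.g. a search); the delay is simulated."""
    await asyncio.sleep(0.2)
    return f"result for {query!r}"


async def synthesize_speech(text: str) -> bytes:
    """Hypothetical text-to-speech call; the delay is simulated."""
    await asyncio.sleep(0.2)
    return text.encode("utf-8")


async def answer_sequential(query: str) -> tuple[bytes, str]:
    # Speak a short acknowledgement first, then start the tool call.
    filler = await synthesize_speech("Let me check that.")
    result = await fetch_tool_result(query)
    return filler, result


async def answer_overlapped(query: str) -> tuple[bytes, str]:
    # Start both at once; total wait is roughly the slower of the two.
    filler, result = await asyncio.gather(
        synthesize_speech("Let me check that."),
        fetch_tool_result(query),
    )
    return filler, result


def timed(coro_fn, query: str):
    """Run one of the coroutines above and return (output, elapsed seconds)."""
    start = time.perf_counter()
    output = asyncio.run(coro_fn(query))
    return output, time.perf_counter() - start


if __name__ == "__main__":
    _, sequential_s = timed(answer_sequential, "today's meetings")
    _, overlapped_s = timed(answer_overlapped, "today's meetings")
    print(f"sequential: {sequential_s:.2f}s, overlapped: {overlapped_s:.2f}s")
```

With the simulated delays, the overlapped version waits for roughly one step instead of two. In a real system the gain would depend on how long each step actually takes and on whether the spoken output needs the tool result before it can begin.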

Another important design tension: how do you balance relevance with exposure to diverse ideas? If Huxe over-personalizes — reinforcing what you already read or believe — it might replicate echo chamber dynamics. In the broader field, systems like HearHere have explored how to surface diverse political perspectives to counter news polarization. Applying that thinking to AI-generated audio will be essential if Huxe wants to avoid simply amplifying confirmation bias.

From the market side, the early funding round is a strong vote of confidence. That said, success will depend on traction, retention, and stickiness. If people use Huxe daily — especially during home routines, commutes, or “screen-down” times — it could establish a new medium. But adoption won’t be automatic: users will demand clarity on privacy, accuracy, and trust in the voices they’re listening to (both literally and figuratively).

So, where might Huxe go next? Expanding to more languages, voice diversity, richer integration (e.g. third-party tools or document libraries), or even embedding co-listening social features are all paths it could take. The question is whether it can maintain high standards of quality while scaling. In a crowded AI space, audio has lagged behind text and video — but that makes this moment unique. If Huxe nails the experience, it may become the default way many of us absorb and interrogate information without staring at screens.