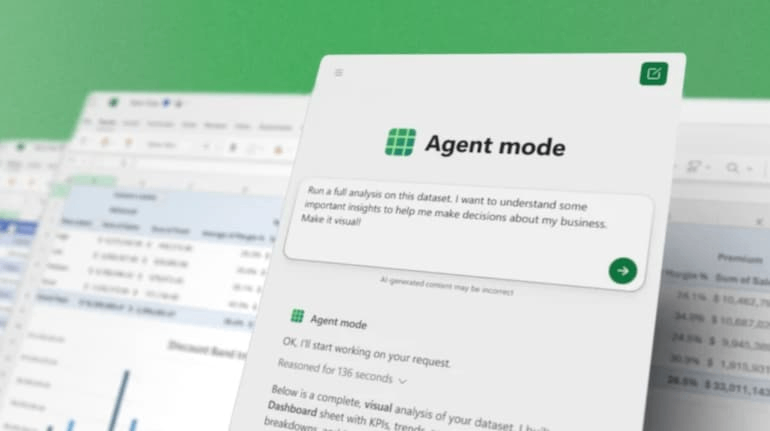

Microsoft is rolling out a new “vibe working” layer across Excel, Word, and Copilot chat. It introduces Agent Mode inside the Office apps and a separate Office Agent in Copilot chat that is powered by Anthropic models. A user can issue a prompt such as “create a monthly performance report” and have the AI both plan and execute the multi-step task. Work that once required hands-on human oversight becomes a conversational exchange. In Excel, Agent Mode performs auditable, modular operations and scores 57.2% accuracy on the SpreadsheetBench benchmark, which is still short of human performance. In Word, it supports interactive drafting and refinement. Office Agent, for its part, can build complete PowerPoint decks from a chat prompt. Microsoft is also adding Anthropic’s Claude Sonnet 4 and Claude Opus 4.1 models to its Copilot ecosystem while continuing to use OpenAI models, and it is giving organizations control over which model they use. Using Anthropic’s models, however, takes data out of Microsoft-controlled environments, a move that raises governance, compliance, and data-risk concerns.

Key Takeaways

– Microsoft’s “vibe working” introduces agentic AI within Office apps: Agent Mode for Excel and Word, and Office Agent for chat-based document creation.

– Anthropic’s Claude models are now integrated into Microsoft 365 Copilot alongside OpenAI’s models, giving users and enterprises a choice of AI back end.

– The shift raises serious data governance questions, as Anthropic-powered agents may operate outside traditional Microsoft-controlled data environments.

In-Depth

Microsoft is taking a bold step in embedding more autonomous AI into its Office ecosystem. The concept of “vibe working” signals a shift away from assistants that respond to prompts and toward AI that acts on a user’s behalf, planning, executing, and verifying tasks with minimal human intervention. In Excel and Word, the new Agent Mode works like an AI macro engine that you guide conversationally. In Excel, for instance, it can break a prompt into subtasks, edit formulas, create charts, and validate the results as a traceable sequence of steps. Microsoft reports 57.2% accuracy on SpreadsheetBench, a spreadsheet benchmark. That result surpasses some competing agents but remains below human performance. In Word, Agent Mode turns document construction into a dialogue. You might say, “Write a quarterly summary comparing this year to last,” and the agent will draft, refine, and clarify the document interactively. Meanwhile, Office Agent, accessed through Copilot chat, uses Anthropic’s Claude models to generate complete PowerPoint decks or Word documents from prompts, doing web research along the way and showing live previews.

Part of Microsoft’s motivation appears strategic: diversifying away from exclusive reliance on OpenAI’s models. The company is now offering Anthropic’s Claude Sonnet 4 and Claude Opus 4.1 within Microsoft 365 Copilot, enabling users to switch models in contexts like “Researcher” or when building custom agents via Copilot Studio. Microsoft frames this as delivering “the best AI innovation from across the industry.” But offering model choice introduces new complexity. Anthropic’s models are hosted outside Microsoft’s infrastructure, so certain Microsoft guarantees around data residency, compliance, and auditability may not fully apply. Administrators must opt in and manage which users can access the alternate models, and organizations are rightfully wary about how enterprise data is handled when external models are allowed. Critics caution that loosening Microsoft’s data envelope could introduce risks around privacy, oversight, and regulatory compliance.

Still, Microsoft is betting that users will value smarter productivity over these trade-offs. Microsoft gives the AI agency by letting it sequence tasks, verify results, and change course mid-workflow. With this, the company hopes to compress work that usually takes hours. Whether enterprises embrace the data implications or balk at them will be a key measure of success for this next generation of AI-infused work.