A Stanford-led team has built a brain–computer interface that decodes a person’s inner monologue (silent, imagined speech) from neural activity in the motor cortex, interpreting words in real time with up to 74% accuracy and without requiring any physical attempt to speak. The advance could offer a lifeline for people with paralysis or speech impairments, enabling smoother, more natural communication. To keep users in control of what is decoded, the researchers added a “password” trigger: users must think a specific phrase (like “chitty chitty bang bang”) before the device begins decoding, reducing the risk of unintended mind‑reading.

Sources: Live Science, Financial Times, Nature/Stanford Medicine

Key Takeaways

– No lip movement needed: The device deciphers thoughts imagined as speech, making communication smoother for those who can’t physically speak.

– Immediate privacy measure: A mental “password” must be imagined before inner speech is decoded, safeguarding against unintentional mind-reading.

– Future potential: Opens pathways to restoring fluid, natural conversation for people with paralysis or severe speech impairments.

In-Depth

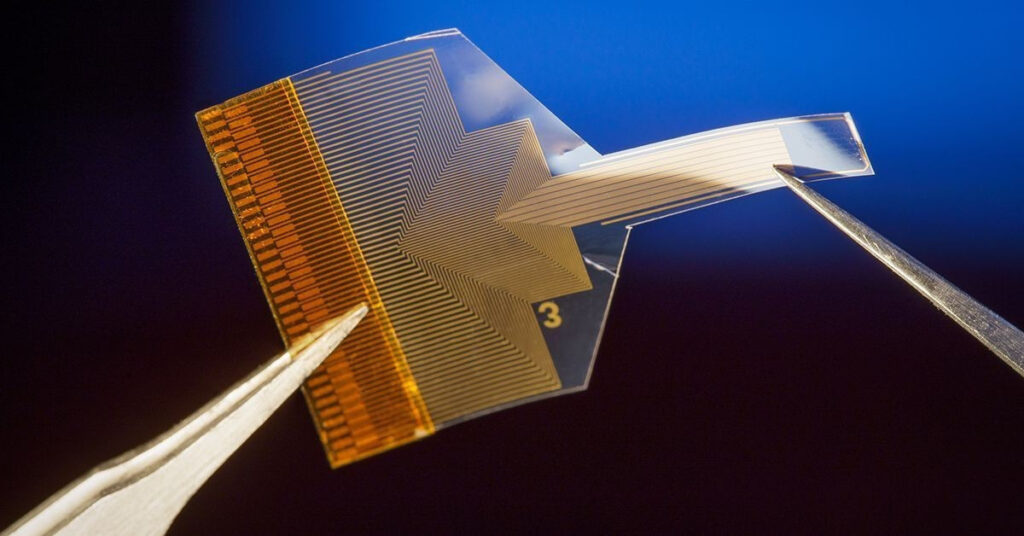

In a quietly revolutionary advance, Stanford researchers have turned inner speech into recordable communication for individuals who cannot speak. By implanting microelectrode arrays in the motor cortex, the brain region that controls movement, including the muscles used for speech, they created a brain–computer interface that decodes imagined speech in real time with up to 74% accuracy. The decoding relies on neural signals associated with silent speech rather than on attempted lip or vocal movement, which makes it more intuitive and less fatiguing for users than systems that require an attempt to speak.

Ethics and privacy play a starring role here. Recognizing the potential for unintended thoughts to spill out, the team added a safeguard: users must “unlock” the device by imagining a predetermined “password” before the system begins decoding inner speech. That level of control helps build trust and helps ensure that only intended thoughts are shared.
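The unlock behavior described above can be pictured as a simple gate placed in front of the decoder's output. The sketch below is only an illustration under loud assumptions: it pretends the decoder emits a stream of already-decoded words, whereas the actual system works on neural signals, and the `PasswordGate` class and its methods are hypothetical names, not part of the Stanford team's software.

```python
from collections import deque


class PasswordGate:
    """Hypothetical gate: withhold decoded words until the unlock phrase is seen."""

    def __init__(self, password: str):
        # Assumption: the password is a short phrase compared word by word.
        self.password = password.lower().split()
        # Sliding window holding the most recent words, as long as the password.
        self.recent = deque(maxlen=len(self.password))
        self.unlocked = False

    def feed(self, word: str):
        """Return the word if the gate is unlocked, otherwise None."""
        if self.unlocked:
            return word
        self.recent.append(word.lower())
        if list(self.recent) == self.password:
            # The password itself is consumed and never passed along.
            self.unlocked = True
        return None

    def lock(self):
        """Re-lock the gate so later words are withheld again."""
        self.unlocked = False
        self.recent.clear()


gate = PasswordGate("chitty chitty bang bang")
stream = "i am hungry chitty chitty bang bang hello there".split()
shared = [w for w in stream if gate.feed(w) is not None]
print(shared)  # ['hello', 'there']
```

In this toy version, everything imagined before the password ("i am hungry") is discarded, and only words that follow the unlock phrase are shared.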

For people rendered voiceless by ALS, stroke, or other conditions, this could be life-changing, offering spontaneous, fluid communication when other routes fail or falter. Early trials focused on pre-selected vocabularies, but the trajectory toward more expansive and natural language decoding seems clear. With privacy, ethics, and user agency built into the foundation, this innovation strikes a promising balance between technological possibility and human dignity.