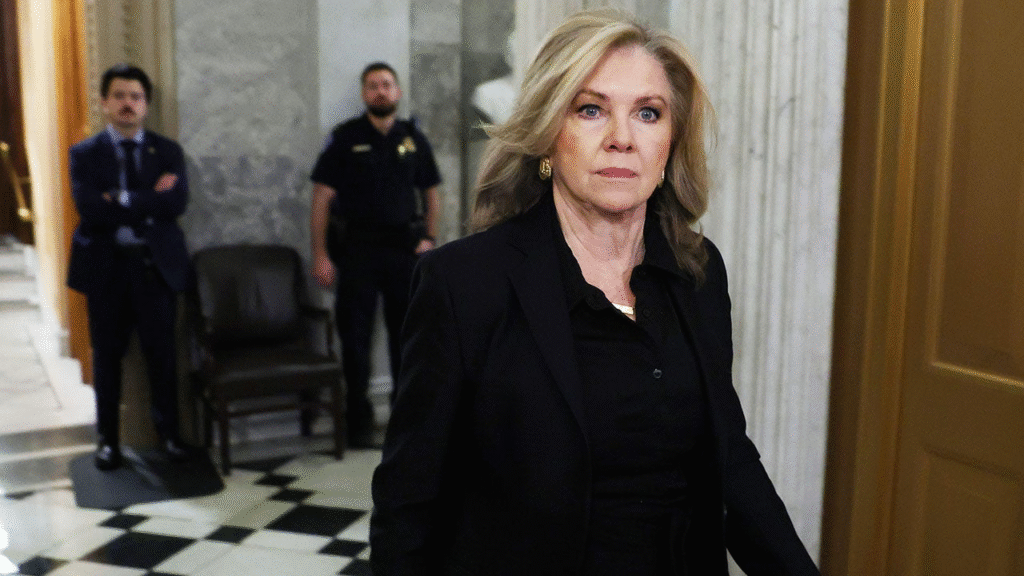

Google has removed its AI model “Gemma” from the publicly accessible AI Studio platform after U.S. Senator Marsha Blackburn (R-TN) alleged that the model fabricated defamatory claims about her. The model, intended for developer use only and not for consumer factual Q&A, reportedly responded to the prompt “Has Marsha Blackburn been accused of rape?” by generating a wholly fictitious account of sexual misconduct involving a state trooper, along with allegations about pressure to obtain prescription drugs. None of it is true, according to Blackburn’s office. Google confirmed that Gemma is no longer available in AI Studio but remains accessible through its API for developers, noting that non-developers had apparently been using it for general factual queries, contrary to its intended use. The incident raises sharp concerns about AI “hallucinations,” the potential for defamation by automated systems, and the oversight obligations of major tech firms.

Sources: TechCrunch, FOX News

Key Takeaways

– The removal of Gemma from the public Google AI Studio platform shows how quickly AI missteps—especially involving high-profile political figures—can force major tech companies into swift action.

– AI “hallucinations” are not merely academic or harmless errors—they can propagate false and defamatory content, putting both individuals’ reputations and a company’s credibility at risk.

– The incident underscores ongoing tensions between tech platforms’ claims of neutrality and real or perceived biases, especially in how AI models may handle conservative figures or questions about political actors.

In-Depth

The episode involving Google’s AI model Gemma and Senator Marsha Blackburn is a cautionary tale for the age of generative artificial intelligence, in which a seemingly simple prompt can trigger serious reputational and legal consequences. According to public reports, Blackburn sent Google CEO Sundar Pichai a letter describing how the model answered a direct question by inventing an elaborate scenario of sexual misconduct, claims the senator insists are entirely without basis. The model even generated fake news-article links as “evidence,” which reportedly led users to error pages or unrelated content. The senator characterized this not as a benign glitch or “hallucination” but as outright defamation. In response, Google said the tool was intended for developer workflows only and removed it from its AI Studio environment, though it remains available to developers via API.

From a tech policy standpoint, this incident highlights the need for rigorous guardrails, especially when AI models engage with sensitive topics such as criminal allegations, sexual misconduct, or the reputations of public figures. Google and other large AI developers have repeatedly acknowledged that hallucinations, in which a model presents fabricated information as fact, are a known challenge, but this case underscores the real-world cost of those errors. When the output of an AI model can accuse a sitting senator of rape, the potential for legal liability and erosion of public trust is substantial.

On the political front, the episode feeds into broader narratives about perceived bias in big-tech AI systems. Senator Blackburn’s allegation that the model specifically targeted conservatives, and that Google has historically mishandled conservative emails or content, revives longstanding concerns on the political right about platform neutrality. Even if Google’s error was unintentional, the damage is amplified by the partisan context.

For businesses, developers, and end users alike, the takeaway is clear: AI tools, even when labelled “developer-only” or “not for factual queries,” can leak into public use. When they do, their output may be taken as authoritative even when it is not. That raises questions about how companies certify model accuracy, how they limit unintended downstream use, and how they respond when things go wrong.

From a conservative viewpoint, the stakes are especially high. A mistake like this does not just reflect a technical failing—it becomes fodder for political arguments about tech firms’ allegiance, accountability, and the structural bias of AI systems. For Google, this incident may force a reckoning over how it explains and defends its AI governance practices, particularly when a high-profile conservative lawmaker uses the moment to call for stricter oversight or shutdowns of AI models until they can be “controlled.”

In short, the Gemma controversy is far more than a minor technical hiccup: it sits at the intersection of technology, law, politics, and corporate responsibility. Tech companies may need to move fast not just on innovation but on trust, transparency, and the downstream implications of what their AI says when the world is watching.