A new technique developed by researchers at Zhejiang University and Alibaba, dubbed “Memp,” gives AI agents built on large language models a dynamic procedural memory. This is a continuously updated, experience-driven memory of how to perform tasks, rather than a record of what happened. The framework lets agents build, retrieve, and update task-relevant workflows, so they can learn from past successes and failures instead of starting from scratch each time. By streamlining complex, multi-step processes, Memp raises success rates while cutting trial-and-error, token usage, and the number of steps needed. Procedural memory generated by a powerful model such as GPT-4o can also be transferred to smaller, more cost-effective models (e.g., Qwen2.5-14B), improving their performance at far lower computational cost. This lifelong-learning approach shows real promise for scalable, reliable enterprise automation.

Sources: arXiv.org, VentureBeat

Key Takeaways

– Procedural memory boosts efficiency and reliability: Agents learn how to perform tasks rather than just recalling data, cutting re-learning time, token usage, and complexity.

– Transferable experience across models: Memory distilled by a strong LLM like GPT-4o can be applied to smaller, cheaper models—making enterprise-grade automation more accessible.

– Procedural memory has limits: According to research in cognitive computation, full adaptability in unpredictable tasks likely requires complementing it with semantic and associative memory systems.

In-Depth

Artificial intelligence is getting smarter, and not just through bigger models but through better memory. Memp, a new procedural memory framework from researchers at Zhejiang University and Alibaba, gives AI agents a memory of how to do things, not just a record of what they did. Instead of starting each new task from scratch, agents build a library of step-by-step procedures and script-like abstractions that they can retrieve and refine over time. The result is higher success rates, fewer steps, and lower costs, which makes the approach a compelling option for enterprise automation.
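To make the build, retrieve, and update loop more concrete, here is a minimal Python sketch of a procedural memory store. It is not Memp's code. The class and function names (`Procedure`, `ProceduralMemory`, `tokenize`, `similarity`), the word-overlap (Jaccard) similarity used in place of embedding-based retrieval, the 0.3 match threshold, and the "store only successful trajectories" policy are all hypothetical choices made for illustration.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding model (assumption)."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return {w for w in words if w}


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of two task descriptions, from 0.0 to 1.0."""
    ta, tb = tokenize(a), tokenize(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


@dataclass
class Procedure:
    task: str          # description of the task this procedure solved
    steps: list[str]   # step-by-step script distilled from a past trajectory
    successes: int = 0
    failures: int = 0


@dataclass
class ProceduralMemory:
    procedures: list[Procedure] = field(default_factory=list)

    def build(self, task: str, steps: list[str], succeeded: bool) -> None:
        """Build: keep a trajectory as a procedure only if it succeeded (hypothetical policy)."""
        if succeeded:
            self.procedures.append(Procedure(task, list(steps), successes=1))

    def retrieve(self, task: str, threshold: float = 0.3) -> Procedure | None:
        """Retrieve: return the most similar stored procedure, or None if none is close enough."""
        scored = [(similarity(p.task, task), p) for p in self.procedures]
        if not scored:
            return None
        score, best = max(scored, key=lambda pair: pair[0])
        return best if score >= threshold else None

    def update(self, proc: Procedure, succeeded: bool,
               revised_steps: list[str] | None = None) -> None:
        """Update: reinforce a procedure that worked; swap in revised steps after a failure."""
        if succeeded:
            proc.successes += 1
        else:
            proc.failures += 1
            if revised_steps is not None:
                proc.steps = list(revised_steps)

    def to_json(self) -> str:
        """Serialize the library as plain JSON text, independent of any model."""
        return json.dumps([asdict(p) for p in self.procedures])

    @classmethod
    def from_json(cls, data: str) -> ProceduralMemory:
        """Load a library serialized by to_json."""
        return cls([Procedure(**d) for d in json.loads(data)])


if __name__ == "__main__":
    memory = ProceduralMemory()
    memory.build("heat an egg in the microwave",
                 ["go to fridge", "take egg", "go to microwave", "heat egg"], succeeded=True)
    memory.build("clean a mug", ["go to cabinet"], succeeded=False)  # failure: not stored

    proc = memory.retrieve("heat a potato in the microwave")  # Jaccard 4/8 = 0.5
    if proc is not None:
        print(proc.steps)  # reuse the stored steps as guidance for the new task
        memory.update(proc, succeeded=True)

    # Hand the same library to a different agent (e.g., one backed by a smaller model).
    shared = ProceduralMemory.from_json(memory.to_json())
    print(shared.procedures[0].successes)  # 2
```

Keeping memory as plain data (task text, step lists, counters) is what lets this sketch hand its library to a different agent. How Memp itself represents memory and feeds it to a smaller model's prompts is not modeled here.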

Here’s the smart twist: once a powerful model like GPT-4o has built that memory library, it can hand it off to a smaller, cheaper model such as Qwen2.5-14B. The smaller model then performs better than it would without the memory, at a much lower price than running the larger model. That is a practical way to bridge the gap between raw capability and economic scalability.

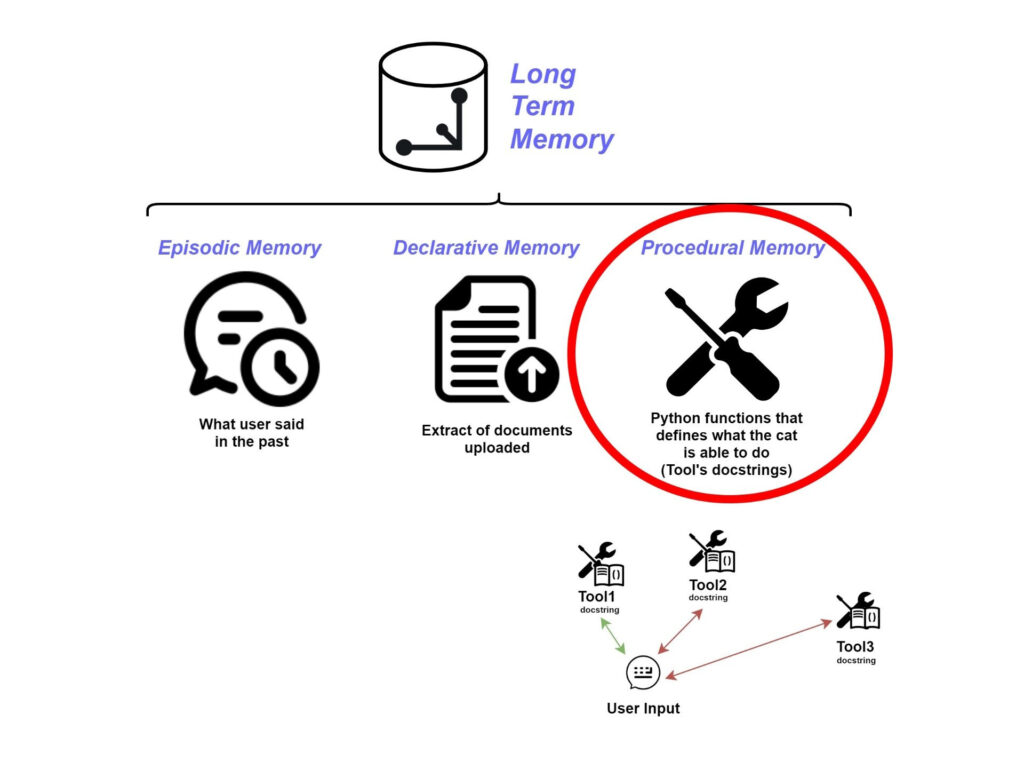

That said, procedural memory isn’t a panacea. Memp improves the “how,” but not always the “why.” In unpredictable, shifting environments (the “wicked” problems of the real world), agents may falter if they cannot grasp semantics or draw associative links. Research in cognitive computation argues for layered memory systems: procedural memory for skills, semantic memory for concepts, and associative memory for context. Together, these would give agents the adaptability and reasoning depth needed for truly autonomous, long-term deployment.

In short, Memp marks a meaningful step toward cost-effective, self-improving AI agents. It advances the “experience economy” of AI by making agents smarter through memory, and doing so for less. Still, building agents that can think and adapt like humans will likely require layering in richer cognitive memory systems.