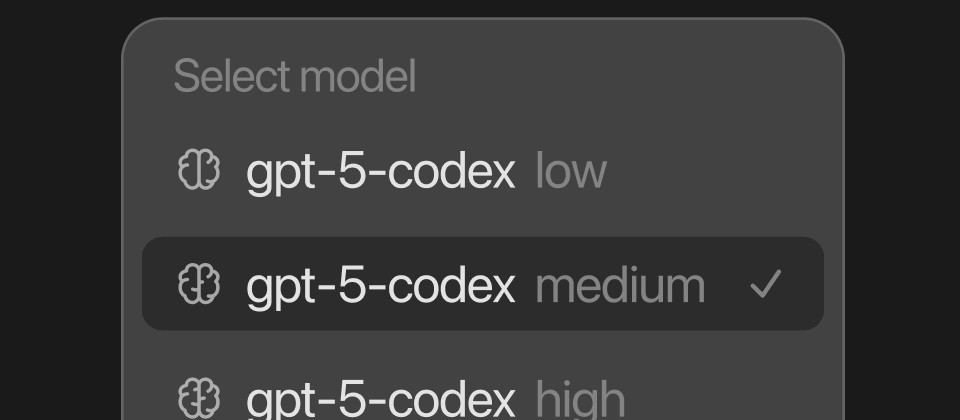

OpenAI has rolled out GPT-5-Codex, a specialized version of its GPT-5 model tailored for its Codex coding agent and designed to improve real-world software engineering workflows. The key change is that the model spends a variable amount of “thinking” time, anywhere from a few seconds to as long as seven hours depending on task complexity. This improves its results on benchmarks such as SWE-bench Verified, as well as on code-refactoring and agentic coding tasks. Users on ChatGPT Plus, Pro, Business, Edu, and Enterprise plans already have access through the terminal, IDEs, GitHub, and ChatGPT; API availability is coming later. The update also reduces incorrect comments in code reviews, increases high-impact feedback, and makes Codex more competitive with tools such as Claude Code, Cursor, and GitHub Copilot.

Sources: TechRadar, TechCrunch

Key Takeaways

– GPT-5-Codex improves significantly over standard GPT-5 in tasks requiring deeper reasoning (especially code refactoring), providing more accurate bug detection and review feedback.

– The model dynamically adapts its reasoning time to task difficulty: simple tasks are handled quickly, while complex ones get more thorough treatment, even over extended periods (up to ~7 hours).

– Access is rolling out to paying customers first across multiple platforms (IDEs, GitHub, terminal, etc.), with API access coming later, positioning OpenAI to compete more strongly in AI coding tools.

In-Depth

OpenAI’s new release, GPT-5-Codex, marks a notable shift in how AI is meant to assist in software engineering, and it could meaningfully change developer workflows. Rather than treating all coding tasks the same, the model introduces adaptive reasoning time: lightweight jobs such as simple syntax or bug fixes are handled swiftly, whereas larger, more complex refactoring or review tasks receive far more “thinking” time, up to seven hours in some cases. This flexibility not only improves raw performance on benchmarks like SWE-bench Verified but also raises the quality of code-review feedback, helping catch more critical bugs before they reach deployment.

From a usability standpoint, OpenAI is pushing hard to make GPT-5-Codex fit seamlessly into the environments developers already use: terminals, IDEs, GitHub, and ChatGPT itself. Users on Plus, Pro, Business, Edu, and Enterprise plans are getting access first. For those building tools or services on OpenAI’s API, access is promised but is intentionally lagging to ensure a stable rollout.

One of the standout improvements comes in code refactoring: on refactoring tasks, the success rate rises from 33.9% with GPT-5 to 51.3% with GPT-5-Codex, a gain of 17.4 percentage points. That is a meaningful jump for teams maintaining large legacy codebases or modernizing older patterns.

Quality and reliability are also front and center. In code reviews, GPT-5-Codex tends to deliver fewer incorrect comments while producing more “high-impact comments,” meaning changes or suggestions that materially improve code quality. For developers, that reduces noise and increases trust. The reduced error rate in feedback could translate into fewer manual corrections and less risk of introducing regressions.

There are strategic implications too. The AI coding tools space is getting crowded: offerings such as Claude Code, Cursor (from Anysphere), and Microsoft’s GitHub Copilot are all pushing forward. With GPT-5-Codex’s stronger performance and deeper integration, OpenAI is likely aiming to solidify its position in that market.

Finally, as with all powerful models, there are trade-offs: more compute for long reasoning tasks, possible latency, and the need for human oversight, especially in critical systems. But by giving developers smarter, more precise tools, GPT-5-Codex could reduce friction in engineering, free up more time for higher-level design and architecture, and potentially accelerate software development cycles in many organizations.