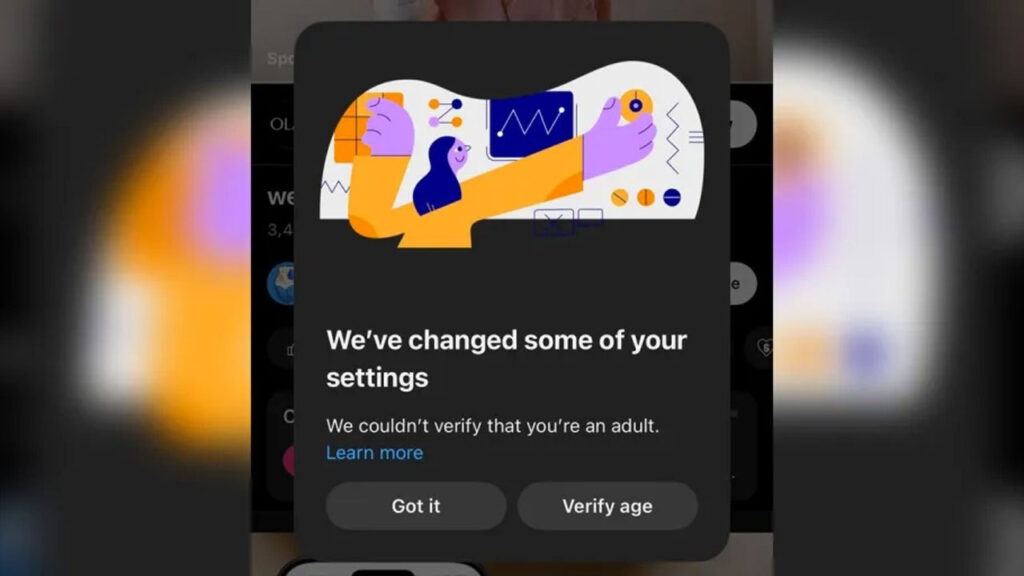

YouTube is beginning to roll out in the United States a new artificial-intelligence system designed to estimate whether users are under 18, regardless of the age they entered when creating their account. The platform will analyze signals such as a user’s watch history, search queries, and the age of their account to flag those likely to be minors. Users flagged as under 18 will be subject to enhanced protections, including disabled personalized ads, activated digital-wellbeing tools (such as screen-time reminders), and stricter content recommendations. Users who believe they have been misidentified may appeal by submitting a government ID, a credit card, or a selfie. Although the feature is being tested on a limited group in the U.S., it reflects a larger global trend toward enforcing age verification and online youth protections.

Sources: The Guardian, AInvest

Key Takeaways

– YouTube’s AI age-estimation system uses behavioral signals (searches, watch history, account longevity) to infer whether a user is under 18 and applies teen-appropriate restrictions when it concludes that they are.

– Users flagged as minors will automatically get less-targeted advertising, enabled digital-wellbeing features, and stricter content-recommendation filters, with an appeal path via government ID, credit card, or selfie.

– The move responds to mounting regulatory and societal pressure worldwide to better protect young users online, but it also raises concerns about privacy, misclassification, and potential impacts on content creators and adult users.

In-Depth

YouTube’s recent announcement that it will deploy an AI-driven age-estimation system in the U.S. marks a significant shift in how online platforms manage user age and access. Rather than relying exclusively on the self-reported date of birth that users enter when signing up, YouTube will now employ machine-learning algorithms that analyze a variety of signals, such as the categories of videos you watch, your search history, and how long you’ve had your account, to estimate whether you are under 18. If the system determines you are underage, YouTube will apply protections designed for teen users. This development comes at a time when governments are tightening regulations around youth access to online content, and platforms face increased scrutiny for how they safeguard minors on their services.

From a safety-and-policy standpoint, the logic is straightforward: many young users lie about their age or use older siblings’ accounts, making it difficult for platforms to effectively restrict mature or age-inappropriate content. By shifting to an inferential approach, YouTube aims to close that loophole, automatically classifying users as minors even when they claim adult status. The promised protections include disabling personalized advertising (which is often legally restricted for minors), enabling digital-wellbeing tools such as “take a break” or bedtime reminders, and limiting repetitive or sensitive recommendations for younger viewers. In one of its blog posts, YouTube described this as an extension of features previously available only to users who had self-identified as underage, now applied on the basis of estimated age alone.

However, the approach is not without controversy. Privacy advocates and content creators have raised concerns about the accuracy of the AI inference and the potential for misclassification. For instance, an adult user who watches content typically favored by younger viewers could be flagged as underage, have features of their account altered or restricted, and then be required to submit a government ID, a selfie, or a credit card to verify their true age. That raises questions about how securely such verification data will be handled, and whether some users will be discouraged from appealing the decision or will simply stop using the platform. For creators, the change could affect monetization and visibility: if a significant portion of their audience is reclassified as minors, the personalized ads that tend to bring higher revenue may be replaced by less-targeted ads, potentially reducing earnings.

The broader context is also important. The move comes amid global regulatory pressure: in the U.K., for example, the Online Safety Act places significant obligations on platforms to verify user ages and prevent minors’ access to adult content, and Australia is moving toward banning under-16s from using major social-media sites. YouTube’s action is part of a wave of platform responses to those pressures. The company also emphasizes that it has already deployed similar machine-learning age-estimation models in other markets and says it is now rolling them out in the U.S. in phases, initially “to a small set of users,” with plans to expand if the test succeeds.

For media producers, influencers, and others invested in the YouTube ecosystem, this means a shift in audience dynamics. Creators whose content draws younger viewers may see changes in exposure or monetization. Marketers may find that ad targeting changes when a portion of viewers is classified as minors. And users themselves may need to confront the possibility that the platform knows more about their usage patterns than they assumed. For the average user over 18, the risk is minimal unless the system errs. But for younger users, or adult users whose watch patterns resemble those of teens, the system will alter their experience significantly unless they verify their age.

In conclusion, YouTube’s rollout of AI-based age estimation reflects both a regulatory imperative and a technological evolution in content-platform management. It promises stronger safeguards for younger users but also raises significant questions about privacy, classification accuracy, user rights, and creator impact. As the system expands beyond its initial testing group, observers will be watching closely how often misclassifications occur, how the verification process is handled, and whether the promised benefits in youth protection outweigh the challenges. For those working in content creation, digital media, or platform policy, including independent producers and podcasters, this shift may require adjustments in audience strategy and monetization planning, along with closer attention to how platform changes ripple through the ecosystem.