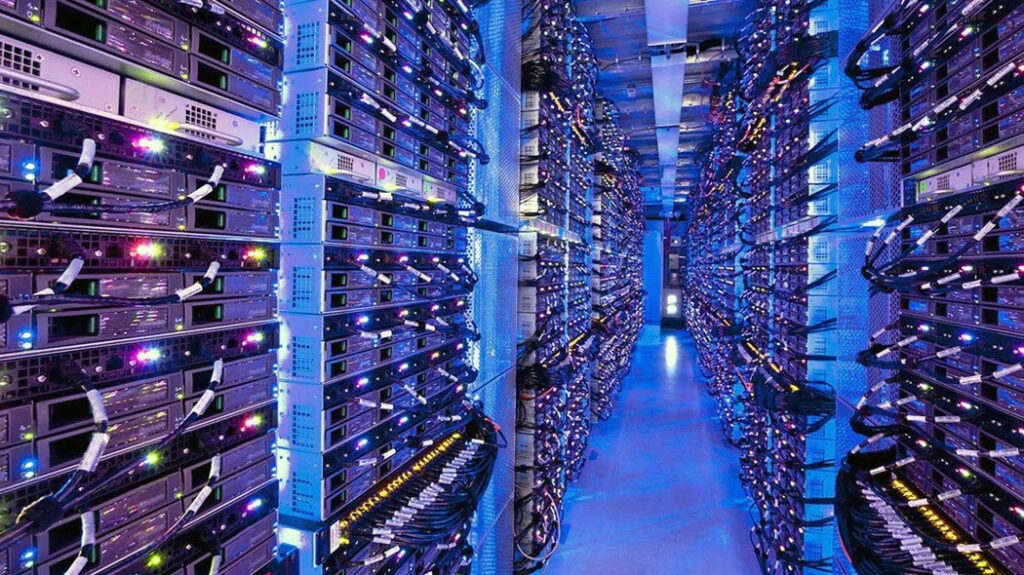

Microsoft CEO Satya Nadella recently highlighted that Microsoft already operates more than 300 data centers across 34 countries, positioning Azure to absorb large-scale AI workloads even as OpenAI races to build its own infrastructure. According to TechCrunch, each of Microsoft’s AI “factories” will house more than 4,600 Nvidia GB300 racks linked by InfiniBand, and Microsoft plans to deploy “hundreds of thousands” of the GPUs in those systems to serve OpenAI workloads. Meanwhile, OpenAI is signing major deals with chipmakers such as Nvidia and AMD to secure computing capacity: Nvidia has pledged up to $100 billion in investment and chip supply to support OpenAI’s expansion, and AMD has signed a multi-gigawatt GPU supply deal that grants OpenAI warrants to acquire up to 10 percent of AMD under certain conditions. But skeptics warn that much of the hype remains on paper: some large investors caution that OpenAI’s chip deals are announcements, not yet executed deployments.

Key Takeaways

– Microsoft already has deep infrastructure in place, with 300+ data centers globally, giving it a significant head start in scaling AI workloads over OpenAI, which is still building new capacity.

– OpenAI is locking in large-scale hardware deals (notably with Nvidia and AMD) that promise future compute capacity and equity upside, but the real challenge is in delivering and operating the hardware at scale.

– Announcements aren’t guarantees: industry watchers caution that promises may outpace execution, especially around chip deliveries, installation, and operating costs.

In-Depth

In the current AI arms race, infrastructure is the battlefield. Microsoft’s push to underscore its existing data center footprint is a calculated move: by reminding the public (and its competitors) that it runs more than 300 data centers across 34 countries, it signals that it is not entering the AI infrastructure game from scratch but scaling what is already formidable. Microsoft intends to build “AI factories” in those centers by deploying clusters of more than 4,600 Nvidia GB300 racks, interconnected via InfiniBand, and to expand that footprint with “hundreds of thousands” of the GPUs in those systems to power OpenAI workloads. That plays right into Microsoft’s central strength: being the backbone provider for AI without having to reinvent core plumbing.

Meanwhile, OpenAI is undertaking its own buildout, but in a different way: by striking massive deals with hardware providers. Nvidia’s commitment of up to $100 billion gives Nvidia skin in the game and secures GPU supply for OpenAI’s growth. Similarly, AMD’s new agreement to supply up to 6 gigawatts of compute, starting with a 1-gigawatt deployment in 2026, is structured with warrants that could allow OpenAI to acquire as much as 10 percent of AMD over time. These deals help hedge OpenAI’s exposure to supply constraints in a hyper-competitive chip market. But the splashy announcements raise a natural question: how much of this is aspirational, and how much is backed by hardware that will actually be delivered?

Skeptics have already flagged that these deals may be more headline than operational reality. One prominent investor cautioned that such deals are “purely announcements” until chips are delivered, integrated, and running reliably. The logistical and engineering challenges of cooling, power, networking, and fault tolerance can’t be wished away. Compounding that, Microsoft itself recently confirmed it is slowing or pausing some data center projects, including a $1 billion build in Ohio, which points to economic and regulatory headwinds in large-scale expansion.

So while Microsoft leans into the advantage of incumbency, OpenAI is betting on contractual leverage and strategic partnerships to close the infrastructure gap. The key question ahead: who can turn bold commitments into gigawatts of stable compute that operates cost-effectively under real-world workloads? The winner of that race may well be the one who can transform promises into persistent uptime.